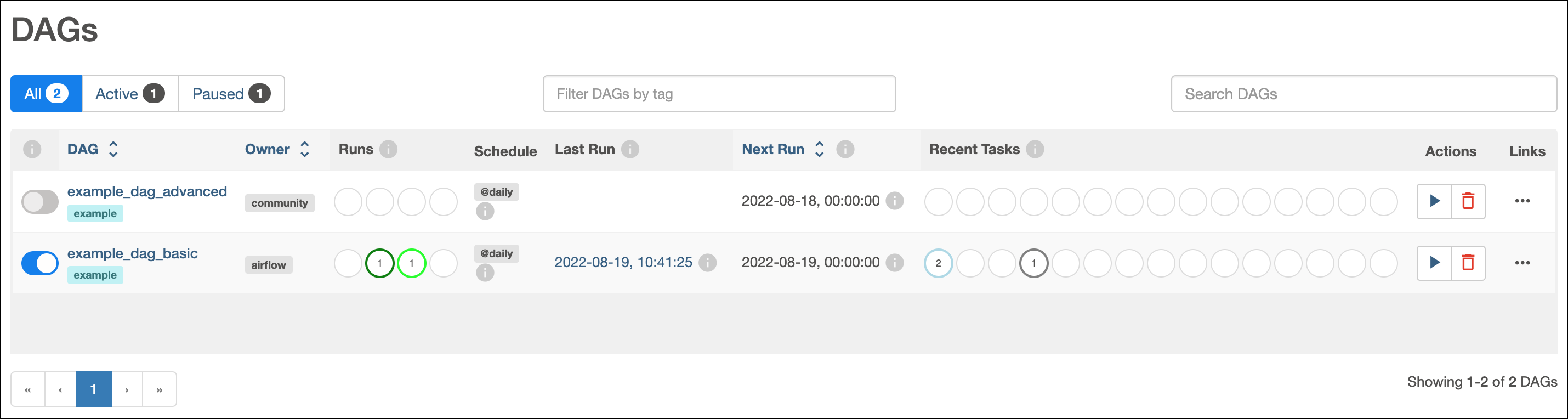

Scheduling recurring tasks within Apache Airflow is a great solution.

The `example_dag_decorator` example is a DAG that sends the server's IP address to an email address. It is declared with `schedule=None`, `start_date=pendulum.datetime(2021, 1, 1, tz="UTC")`, and `catchup=False`, and it takes an `email` parameter. A `GetRequestOperator` task with `task_id="get_ip"` fetches the IP, and a `prepare_email` task, declared with `multiple_outputs=True`, reads the external IP from the returned JSON.

Two scheduling terms come up throughout:

- Cron presets: every DAG handled by the Airflow scheduler runs at a repeating frequency, which can be expressed with a cron preset.
- Run after: the earliest time at which the DAG can be scheduled. Depending on the DAG's timetable, this date can be the same as the end of the data interval shown in the Airflow UI.

In much the same way that a DAG is instantiated into a DAG Run every time it runs, the tasks specified inside a DAG are also instantiated into Task Instances along with it.

A DAG run has a start date when it starts and an end date when it ends. This period describes the time when the DAG actually "ran." Aside from the DAG run's start and end date, there is another date called the logical date (formerly known as the execution date), which describes the intended time a DAG run is scheduled or triggered. It is called logical because it is abstract and has multiple meanings, depending on the context of the DAG run itself.

For example, if a DAG run is manually triggered by the user, its logical date is the date and time at which the DAG run was triggered, and the value should be equal to the DAG run's start date. However, when the DAG is automatically scheduled with a schedule interval in place, the logical date marks the start of the data interval, and the DAG run's start date is then the logical date + the schedule interval.
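The relationship between the logical date, the data interval, and the start date can be made concrete with a little date arithmetic. The following is a minimal sketch in plain Python, not Airflow's own API: the helper names `scheduled_run` and `manual_run` are hypothetical, and it assumes a fixed schedule interval such as one day.

```python
from datetime import datetime, timedelta, timezone


def scheduled_run(logical_date: datetime, interval: timedelta) -> dict:
    """Dates for an automatically scheduled run (hypothetical helper)."""
    return {
        "logical_date": logical_date,
        # The logical date marks the start of the data interval.
        "data_interval_start": logical_date,
        "data_interval_end": logical_date + interval,
        # The run starts at the logical date plus the schedule interval,
        # that is, once the data interval is over.
        "earliest_start": logical_date + interval,
    }


def manual_run(triggered_at: datetime) -> dict:
    """Dates for a manually triggered run (hypothetical helper)."""
    # The logical date equals the moment the run was triggered and started.
    return {"logical_date": triggered_at, "start_date": triggered_at}


run = scheduled_run(datetime(2021, 1, 1, tzinfo=timezone.utc), timedelta(days=1))
print(run["logical_date"].isoformat(), "->", run["earliest_start"].isoformat())
```

With a daily interval, a run whose logical date is 1 January covers the data for 1 January and cannot start before 2 January.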
The image shows that the DAG `dataset_dependent_example_dag` runs only after two different datasets have been updated. In the Airflow UI, the Next Run column for the downstream DAG shows the dataset dependencies for the DAG and how many of them have been updated since the last DAG run.

For more information on schedule values, see DAG Run. If `schedule` is not enough to express the DAG's schedule, see Timetables. For more information on the logical date, see Data Interval.

Every time you run a DAG, you create a new instance of that DAG, which Airflow calls a DAG Run. Each DAG Run of the same DAG has a defined data interval, which identifies the period of data it covers.

As an example of why this is useful, consider a DAG that processes a daily set of experimental data. It has been rewritten, and you want to run it on the previous 3 months of data. That is no problem, since Airflow can backfill the DAG and run copies of it for every day in those previous 3 months, all at once. Those DAG Runs will all have been started on the same actual day, but each DAG Run will have one data interval covering a single day in that 3-month period, and that data interval is what all the tasks, operators, and sensors inside the DAG work with.
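The backfill example can be illustrated by listing the data intervals such a backfill would cover. This is a rough sketch in plain Python rather than Airflow code: the function name `daily_data_intervals` and the chosen dates are assumptions made for illustration, and each interval is modeled as a half-open `(start, end)` pair of one day.

```python
from datetime import date, timedelta


def daily_data_intervals(first_day: date, last_day: date) -> list[tuple[date, date]]:
    """Return one (start, end) data interval per day (hypothetical helper)."""
    intervals = []
    day = first_day
    while day <= last_day:
        # Each backfilled run covers exactly one day of data.
        intervals.append((day, day + timedelta(days=1)))
        day += timedelta(days=1)
    return intervals


# Three months of daily runs; all could be started on the same actual day.
intervals = daily_data_intervals(date(2021, 1, 1), date(2021, 3, 31))
print(len(intervals), "runs, first:", intervals[0], "last:", intervals[-1])
```

Every run in the list could start at the same wall-clock time, yet each one still works on its own single-day interval, which is what keeps backfilled runs independent of one another.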
